Definition, History, Usage and Future of Computer Data Storage

or how technological improvements challenge mass media with individual media

by Lev Lafayette. Published in Organdi, a peer-reviewed journal of culture, creation, and critique - Issue 9, 2007

Definition

Defining electronic data storage, as the elaborations in this section will indicate, is no trivial task. A brief introduction would define it as any device which requires electrical power to record information. Computer data storage, distinct from electronic data storage, requires a computer system to read and manipulate the encoded data. Storage makes up one of the core components of a computer system along with the central processing unit, as defined in the von Neumann model which has been in use since the 1940s. Without storage ability, a computer becomes a signal processing device (e.g., a CD player). Encoded data may be stored as persistent or volatile memory (e.g., hard disk versus RAM), and it may be mutable or read-only (e.g., hard disk versus WORM CD/DVD ; "write once, read many"). Storage may be accessed sequentially or randomly (e.g., tape storage versus random access memory).

The division of primary, secondary, and tertiary storage is mainly based on distance from the central processing unit with resulting differences in access methods. Primary storage is directly connected to the central processing unit of a computer system. This includes internal registers and accumulators, internal cache memory, and random access memory directly connected to the CPU via a memory bus. Apart from their typical volatility [1], the most significant difference between primary storage and other forms is the speed and method of access.

This difference in access method is evident when comparing primary to secondary data storage. Secondary storage uses the computer’s I/O channels and invariably provides persistent information. Modern operating systems also use secondary storage to provide "virtual memory" or "swap" space, an accessible portion of secondary storage which enhances the capacity of primary memory. The other key difference between primary and secondary memory is persistence ; whereas primary storage may be persistent, persistence is axiomatically necessary for secondary storage. Hence, secondary storage is often referred to as "mass storage", "offline storage" and the like. Both its persistence and access methods result in relative slowness compared to primary data storage [2], but with greater capacity.

Tertiary and network storage provides a third type of computer data storage. In this instance the access method requires a local or network mount and unmount procedure or, in many network cases, a separate connect and disconnect procedure. Whilst the storage medium is usually identical to that of secondary storage, although almost invariably on a significantly greater scale, the access method is significantly slower due to the greater distance from the CPU. One can cite network-attached storage (NAS) systems and Storage Area Networks (SAN) [3] as examples of tertiary and network storage.

Various technical metrics can be used to analyse the performance of computer storage. Capacity is the total amount of information that a medium can hold and is usually expressed in bytes (e.g., 512 megabytes of RAM, 60 gigabytes of HDD). Whilst less of a factor to most users, density refers to the compactness of the information ; the storage capacity divided by the area or volume (e.g., 2 megabytes per square centimeter). Far more important are latency and throughput. The former refers to the time taken to access a location in storage, and the latter to the rate at which data can be read from or written to the storage device. Both of these are variable and depend on existing congestion, fragmentation and so forth, and are typically differentiated by read and write, minimum, maximum and average.
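The interplay of latency and throughput can be sketched with a simple first-order model: the time for one request is roughly the latency plus the payload size divided by the throughput. The Python sketch below is illustrative only ; the function names and the sample figures (10 ms latency, 50 megabytes per second) are assumptions chosen for the arithmetic, not measurements from this article.

```python
def transfer_time(size_bytes, latency_s, throughput_bytes_per_s):
    """First-order estimate: one latency penalty plus streaming time."""
    return latency_s + size_bytes / throughput_bytes_per_s


def density(capacity_bytes, area_cm2):
    """Storage density as capacity divided by area (bytes per cm^2)."""
    return capacity_bytes / area_cm2


MB = 10**6  # decimal megabyte (an assumption; binary units would use 2**20)

# Hypothetical device: 10 ms latency, 50 MB/s sustained throughput.
small = transfer_time(4 * 1000, 0.010, 50 * MB)   # a 4 kB read
large = transfer_time(100 * MB, 0.010, 50 * MB)   # a 100 MB read

print(f"4 kB read:   {small * 1000:.2f} ms (latency dominates)")
print(f"100 MB read: {large:.2f} s (throughput dominates)")
```

Under these assumed figures, small requests are governed almost entirely by latency and large ones by throughput, which is why both metrics are usually reported together.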

Computer data storage can also be differentiated by the technological medium, of which magnetic, semiconductor, and optical are compared. Magnetic storage uses non-volatile, high-density patterns of magnetisation to store information. Access is sequential, hence the use of sophisticated seek and cycle procedures. Typical use in contemporary computer systems is for secondary storage in the form of hard disks, or magnetic tapes for tertiary and off-line storage mechanisms. Semiconductor integrated circuit storage utilises transistors or capacitors to store data. It is typically used for primary storage but is increasingly used as secondary storage in the form of persistent flash memory. Finally, persistent and sequential optical storage uses pits etched in the surface of the medium which are read from the reflection caused by laser diode illumination. A variation is magneto-optical storage, which uses optical disc storage with a ferromagnetic surface. Typical contemporary technologies for optical storage include CD-ROM, (HD) DVD, Blu-Ray (BR), and Ultra Density Optical (UDO).

Table 1. Summary of Storage Types and Typical Capacity in Contemporary Desktop Systems

| Storage | Capacity | Access Method | Access Speed |
|---|---|---|---|
| Primary | 4 GB | Direct/Bus | 800 Mbytes/s |
| Secondary | 500 GB | I/O Channels | 3.0 Gbits/s |
| Tertiary | 5 TB | Ethernet/Network | 100 Mbits/s |
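Because Table 1 mixes bytes and bits, the three access speeds are easier to compare once converted to a common unit. The following Python sketch does that conversion ; it assumes decimal prefixes and 8 bits per byte, and it ignores protocol and encoding overhead, so the results are nominal ceilings rather than measured rates. The variable and function names are illustrative.

```python
# Convert the access speeds in Table 1 to megabytes per second.
# Assumptions: decimal prefixes, 8 bits per byte, no protocol overhead.
BITS_PER_BYTE = 8

table_1 = {
    "Primary":   (800, "Mbytes/s"),
    "Secondary": (3.0, "Gbits/s"),
    "Tertiary":  (100, "Mbits/s"),
}

TO_MBYTES_PER_S = {
    "Mbytes/s": 1.0,
    "Mbits/s": 1.0 / BITS_PER_BYTE,
    "Gbits/s": 1000.0 / BITS_PER_BYTE,
}


def to_mbytes_per_s(value, unit):
    """Scale a (value, unit) pair to megabytes per second."""
    return value * TO_MBYTES_PER_S[unit]


normalised = {tier: to_mbytes_per_s(v, u) for tier, (v, u) in table_1.items()}
for tier, rate in normalised.items():
    print(f"{tier:<10}{rate:8.1f} MB/s")
```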

History

The history of the various types of data storage can be expressed in terms of the typical technologies used and applying the technical metrics, along with financial cost. The changes that occur can be presented as an elaboration of the empirical observation made by Gordon Moore, co-founder of Intel, in 1965, concerning the number, and therefore density, of transistors on a minimum cost integrated circuit doubling every twenty-four months [4] and usually referred to as "Moore’s Law". Moore has since claimed that "no exponential is forever" and has noted that integrated circuit complexity is somewhat behind projections made in 1975, but nevertheless contemporary technologies are still within reach of these optimistic goals, or "we can delay ’forever’". [5]

Table 2. Moore’s Law. From : http://www.intel.com/technology/mooreslaw/pix/mooreslaw_graph2.gif

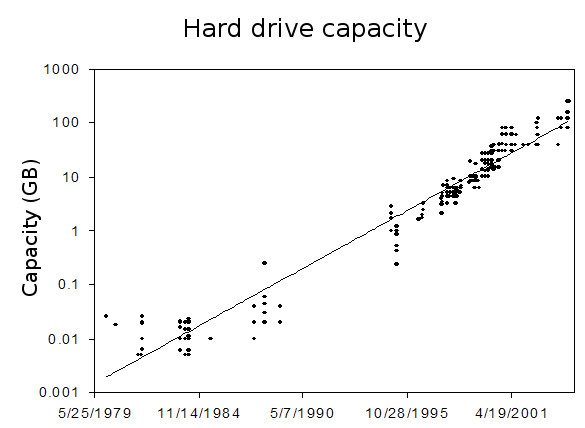

The specific application of Moore’s Law has since been expanded to refer to a range of computing units. One particular application to secondary and tertiary storage, known as Kryder’s Law, refers to advances in hard disk storage per square millimetre (originally phrased as per square inch), which has seen enormous changes in density and cost metrics : "Since the introduction of the disk drive in 1956, the density of information it can record has swelled from a paltry 2,000 bits to 100 billion bits (gigabits), all crowded in the small space of a square inch. That represents a 50-million-fold increase. Not even Moore’s silicon chips can boast that kind of progress." [6]. The same however cannot be said for various hard drive metrics overall ; the rate of increase has been roughly similar to that of transistor count, although recent trends show that it is slowing somewhat. The following table provides some historical examples of secondary storage devices, including the cost per megabyte in US dollars. The transition from tape and floppy drives to hard disk drives should be self-evident.

Table 3 : Secondary Storage Capacity and Price : Some Historic Examples

| Year | Manufacturer | Size | Price for Unit | Price Per Megabyte |
|---|---|---|---|---|
| 1955 | IBM | 12 megabytes | $74800 | $6233 |
| 1980 | Unspecified | 204 kilobytes | $695 | $3488 |
| 1982 | Lobo | 19 megabytes | $5495 | $289 |
| 1984 | Unspecified | 720 kilobytes | $398 | $566 |
| 1986 | Unspecified | 20 megabytes | $489 | $24.45 |
| 1988 | Seagate | 30 megabytes | $299 | $9.97 |
| 1990 | Compusave | 702 megabytes | $2295 | $3.27 |
| 1992 | Fujitsu | 2000 megabytes | $2799 | $1.33 |
| 1994 | Quantum | 1800 megabytes | $964 | $0.536 |
| 1996 | Quantum | 2000 megabytes | $319 | $0.128 |
| 1998 | Quantum | 12056 megabytes | $349 | $0.0290 |
| 2000 | Maxtor | 81900 megabytes | $295 | $0.00385 |
| 2002 | IBM | 120000 megabytes | $146 | $0.00122 |
| 2004 | Hitachi | 160000 megabytes | $97.50 | $0.000609 |
| 2006 | Western Digital | 400000 megabytes | $105.93 | $0.000265 |
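The price-per-megabyte column of Table 3 is simply unit price divided by size, and the first and last rows give a rough sense of the long-run rate of decline. The Python sketch below uses only those two rows and assumes a constant exponential decline between them, which the intermediate rows show to be only approximately true ; the function names are illustrative.

```python
import math


def price_per_mb(unit_price_usd, size_mb):
    """Price per megabyte in US dollars."""
    return unit_price_usd / size_mb


def halving_time_years(year_a, price_a, year_b, price_b):
    """Years for the price to halve, assuming constant exponential decline."""
    halvings = math.log2(price_a / price_b)
    return (year_b - year_a) / halvings


# Endpoints copied from Table 3.
first = (1955, price_per_mb(74800, 12))
last = (2006, price_per_mb(105.93, 400000))

t_half = halving_time_years(first[0], first[1], last[0], last[1])
print(f"Price per megabyte halved about every {t_half:.1f} years")
```

On these two data points the price per megabyte halves roughly every two years, which is in line with the doubling period usually quoted for Moore’s Law.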

A further application of Moore’s Law relevant to this discussion is the development of primary storage. Again, similar results are evident with increasing size and decreasing price per unit and per megabyte [7]. As the table indicates, there have been increases in memory size at an inconsistent rate, and similar inconsistencies in price decreases per megabyte. In this particular context, RAM almost invariably refers to integrated circuits added to the motherboard, rather than on-die cache memory, paging systems or virtual memory and swap spaces on secondary storage [8].

Table 4 : Random Access Memory Capacity and Price : Some Historic Examples

| Year | Company | Size (megabytes) | Price for Unit | Price Per Megabyte |
|---|---|---|---|---|
| 1957 | C.C.C. | 0.00000098 | $392 | $411,041,792 |
| 1979 | SD Sales – Jade | 0.064 | $419 | $6,704 |
| 1981 | Jade | 0.064 | $280 | $4,375 |
| 1983 | California Digital | 0.256 | $495 | $1,980 |
| 1985 | Do Kay | 0.512 | $440 | $859 |
| 1987 | Advanced Computer | 3.072 | $399 | $133 |
| 1989 | Unitex | 4.096 | $753 | $184 |
| 1991 | IC Express | 4.096 | $165 | $41 |
| 1993 | Nevada | 4.096 | $159 | $39 |
| 1995 | First Source | 16.384 | $499 | $31 |
| 1997 | LA Trade | 32.768 | $104 | $3.17 |
| 1999 | Tiger Direct | 131.072 | $99.99 | $0.78 |
| 2001 | StarSurplus | 262.144 | $49 | $0.187 |
| 2003 | Crucial | 524.288 | $65.99 | $0.129 |
| 2005 | New Egg | 1048.576 | $119 | $0.113 |
| 2007 | New Egg | 1048.576 | $79.98 | $0.078 |

Computer technologies have developed, and continue to develop, at significantly different rates. RAM speed and hard drive seek speeds, for example, improve particularly slowly - a few percent per year at best. The price of most components has declined at an enormous rate over past decades, while the general capacity for performance (instructions per second, hard drive capacity and density) has increased at a similar rate. Table 5 below shows how fast prices fall : 100 to 1000 times cheaper every 10 years, depending on the device.

Table 5 : Historical cost of computer memory and storage
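The rule of thumb above (100 to 1000 times cheaper every ten years) can be restated as an annual rate. As a worked sketch, assuming a constant exponential decline, a price that falls by a factor $f$ per decade falls by a factor $r$ per year and halves every $t_{1/2}$ years :

```latex
\[
  r = f^{1/10}, \qquad t_{1/2} = \frac{10 \ln 2}{\ln f}
\]
\[
  f = 100:\; r \approx 1.58,\; t_{1/2} \approx 1.5 \text{ years}
  \qquad
  f = 1000:\; r \approx 2.00,\; t_{1/2} \approx 1.0 \text{ years}
\]
```

In other words, prices declining at these rates fall by roughly 37 to 50 per cent each year.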

One area which will require further investigation and is particularly related to tertiary storage capabilities is the cost of networking technologies, such as Ethernet NICs (network interface cards), coaxial and twisted pair cabling etc, along with the importance of TCP/IP layer two technologies (hubs, switches) in enhancing overall throughput. Viewed with the simplest metric, local area networking cabling capacity in an average installation has increased from 2 megabits per second in the 1980s and 10 megabits per second in the 1990s to 100 megabits per second in this decade, with gigabit installations increasingly common. A general rule that can be used for Ethernet technology is a tenfold increase in bandwidth per decade. Manufacturers are currently working on a higher-speed version of SONET (Synchronous Optical Network), boosting bandwidth from 10Gbps to 40Gbps.

Table 6. Hard Drive Capacity over Time. From : http://en.wikipedia.org/wiki/Image:Hard_drive_capacity_over_time.png

Usage

With the experience of early versions of MS-Windows and the release of MS-Windows 95/MS-DOS 7.0, Niklaus Wirth pithily remarked : "Software gets slower faster than hardware gets faster" [9] which is sometimes cited as the antithesis of Moore’s Law. Memory requirements of standard workstation systems increased significantly with the new releases of operating system and applications software. A core cause is that software development, through the use of such aids as Integrated Development Environments (IDEs) and visual programming, produces material that is closer to the needs of users but further from the computer’s instruction set, requiring additional layers of interpretation.

A related issue is that as computer software becomes more "user friendly" it requires more machine resources. Whilst this is a trivial statement in itself, the consequences are important when a comparison is made between the system resources recommended for the operating system and common applications and the standard system hardware available at the time of release. For the purposes of this discussion, comparisons are made between the resource requirements of the Microsoft Windows operating systems (as the most common desktop environment), the Microsoft Office application suite, and Intel computers [10].

A standard desktop in 1997 would probably have a hard disk of 2-4 gigabytes with 8-16 megabytes of RAM and a Pentium II CPU, capable of c1000 MIPS. Tertiary storage, through the use of modems up to 56kbps, was normal. The operating system would probably be a version of the MS-Windows 95 operating system, whose recommended specifications were a 486 or higher (c50 MIPS), 8 megabytes of RAM, and a typical installation of 50-55 MB of disk space. The application suite, MS-Office 97, required the same processor, up to 12 megabytes of RAM if MS-Access was used (8 otherwise), and had a typical install of 125 MB of disk space. In other words, processor requirements were more than adequately met, RAM was pushed to its limits resulting in disk thrashing of virtual memory, and less than an eighth of the typical HDD would be used for the operating system and application suite.

Five years later, the standard desktop would have between 40 and 80 gigabytes of disk space with 128-256 megabytes of RAM and a Pentium III, capable of c2500 MIPS. The use of Digital Subscriber Line technologies was common. The operating system would be MS-Windows NT 5.1 Professional, also known as Windows XP. The recommended system requirements for this operating system would be a Pentium of at least 300 MHz (the Pentium III ran at between 450 MHz and 1.4 GHz), 128 megabytes of RAM, and at least 1.5 gigabytes of disk space. The MS-OfficeXP application suite actually required a lower-powered Pentium (133 MHz), 128 megabytes of RAM plus 8 MB per application running, and approximately 250 MB of disk space. Again, processor requirements are more than adequately met and RAM is still in short supply on inexpensive machines, but disk space requirements have fallen significantly to approximately 1/50th of total disk space.

Today, a standard desktop is likely to come with 120-500 gigabytes of disk space with 1.0 to 4.0 gigabytes of RAM and a Celeron D or Pentium IV processor capable of approximately 10000 MIPS. High-end DSL (e.g., ADSL2), providing rates of up to ten times the preceding technology, is common. Windows Vista (Business Edition) recommends a 1 GHz processor, 1 gigabyte of RAM, and 15 GB of hard disk storage. Again the application suite (MS-Office Professional 2007) requires a lower-end processor (500 MHz), less memory (256 megabytes) and 2 gigabytes of hard disk. In this instance, processor requirements remain more than adequately met, memory issues are increasingly resolved and hard disk usage remains low.

These three examples correlate with anecdotal evidence concerning usage ; as the requirements for operating systems and core applications decline in proportion to total system resources, more system resources are available for user data. Further, as total system resources improve, user data will increasingly take the form of media which previously required prohibitive resources. As an example, a plain-text file of 100 000 words (approximately 300 pages) requires approximately 1 megabyte of disk space, whereas an uncompressed audio file requires around 10 megabytes per minute of music, and video requires approximately 100 megabytes per minute of footage (although this is highly variable).
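The per-medium figures above translate directly into how much user data a given amount of free disk space can hold. The Python sketch below applies them to the upper end of the contemporary desktop described earlier (500 gigabytes of disk, less 15 GB for the operating system and 2 GB for the application suite). It assumes decimal units (1 GB = 1000 MB) and takes the approximate per-minute rates at face value ; the names are illustrative.

```python
# Approximate storage costs quoted in the text.
MB_PER_BOOK = 1          # plain text, about 100 000 words
MB_PER_MIN_AUDIO = 10    # uncompressed audio
MB_PER_MIN_VIDEO = 100   # video (highly variable)


def media_capacity(free_mb):
    """How much of each medium fits in the given free space (in MB)."""
    return {
        "books": free_mb // MB_PER_BOOK,
        "audio_hours": free_mb / MB_PER_MIN_AUDIO / 60,
        "video_hours": free_mb / MB_PER_MIN_VIDEO / 60,
    }


# 500 GB disk minus 15 GB (operating system) and 2 GB (application suite).
free_mb = (500 - 15 - 2) * 1000
result = media_capacity(free_mb)

for medium, amount in result.items():
    print(f"{medium:<12}{amount:>12,.1f}")
```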

Future

By most accounts the trends elaborated in this article seem destined to continue. Recent developments by IBM in deep-ultraviolet optical lithography and the development of Penryn chips by Intel this year [11] suggest that the challenge of physical limits to CPU will remain surmountable for at least another decade or longer. Such continuing exponential changes have results which seem improbable to casual users ; within a decade personal computers would have processors that could provide clock rates of 100 GHz and close to 100 billion instructions per second ; 10 to 15 terabytes of hard disk storage, and 64 to 128 gigabytes of RAM [12]. To put this technical information into the perspective of media use, such capacity would mean the personal archiving of up to one thousand feature length films without off-line storage, over ten thousand CD-length recordings, and one million books. For comparison’s sake, the entire National Archives of Britain, with 900 years of written material, holds close to 600 terabytes of data. The following table provides a probable technological trajectory and data storage of typical media.

Table 7. Typical Future Desktop Systems and Storage Capacity

| Year | HDD Capacity | RAM Capacity | CPU BIPS | Films | CDs | Books |
|---|---|---|---|---|---|---|
| 2008 | 500 GB | 4 GB | 10 | 50 | 500 | 50 000 |
| 2010 | 1 TB | 8 GB | 20 | 100 | 1 000 | 100 000 |
| 2012 | 2 TB | 16 GB | 40 | 200 | 2 000 | 200 000 |
| 2014 | 4 TB | 32 GB | 60 | 400 | 4 000 | 400 000 |
| 2016 | 8 TB | 64 GB | 80 | 800 | 8 000 | 800 000 |
| 2018 | 16 TB | 128 GB | 100 | 1 600 | 16 000 | 1 600 000 |
| 2020 | 32 TB | 256 GB | 200 | 3 200 | 32 000 | 3 200 000 |
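The storage columns of Table 7 follow a simple rule : each value doubles every two years from its 2008 baseline. The Python sketch below reproduces the HDD, RAM, films and CDs columns on that assumption, treating 1 TB as 1000 GB as the table does. It does not model the CPU column, which does not double consistently in the table ; the function name is illustrative.

```python
BASE_YEAR = 2008
DOUBLING_PERIOD_YEARS = 2

# 2008 baselines copied from Table 7.
baseline = {"hdd_gb": 500, "ram_gb": 4, "films": 50, "cds": 500}


def project(base_value, year):
    """Project a 2008 value forward, doubling every two years."""
    doublings = (year - BASE_YEAR) / DOUBLING_PERIOD_YEARS
    return base_value * 2 ** doublings


for year in range(2008, 2021, 2):
    row = {name: project(value, year) for name, value in baseline.items()}
    print(year, {name: int(v) for name, v in row.items()})
```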

Initially it would seem that the future would witness an exponential acceleration in the thematic considerations oft-commented on in postmodernist theory ; the emphasis of style over substance, disposable culture, the celebration of surface over depth, the application of shock value for neo-isms and so forth. However, it must be emphasised that this only represents one demographic - those who remain largely ignorant (whether by choice or lack of opportunity) of technical considerations. Another demographic surely must include those who are aware of capacity and yet still care about issues such as bandwidth, storage use, clock-cycles etc. as they realise that extracting the most from a computer system requires reducing resource use to a minimum on each and every application. Given that such people are those largely responsible for systems administration and technical development in the first place, it would seem that quality within quantity remains at least a possibility - especially given the ability for self-publication and community content development. Essentially, with both production and storage decentralised, the capacity of "mass media" to dominate culture is significantly weakened.

A significant driver in such an approach is the growth of home networking. The adaptability of computer input/output systems provides the opportunity for centralised format and storage of media which has been hitherto diverse. An inevitable result of this is that non-computer storage and input/output devices increasingly become networked, along with various "smart-devices", such as game consoles, PDAs, entertainment units etc. Individual interest in ensuring minimisation of personal costs and maximising utility provides an incentive for archival quality. An interesting correlation to such incentives is the adoption of operating system technologies which are best suited to networking (i.e., various forms of UNIX, Linux, Mac OS X etc) ; which in itself has a highly developed subculture [13].

The purpose of this article has been to provide an overview of changes in computerised storage technology with an individual focus. Rapid technological improvements have been consistent over the past fifty years, in most cases roughly doubling in performance or capacity every two years. At the same time the relative requirements of operating systems and applications decline in proportion to capacity available for user data, thus providing the increasing opportunity for high bandwidth multimedia. This capacity, which may allow aesthetic and cultural improvement by sheer quantitative capacity, is countered by the inducements of technology itself and a very strong culture around the necessary technologies. Mass media, "the culture industry", the dominant producer and distributor of archival content throughout the history of modernity, is now challenged by decentralised democratic forces embodied in technology itself.

References

[1] There are many examples of persistent primary memory, such as ROM (Read-Only Memory) and EPROM (erasable, programmable read-only memory) ; magnetic core memory was also both primary and non-volatile. Flash memory uses semiconductor integrated circuits but is typically used for secondary storage

[2] In a contemporary computer system (with solid-state primary storage and rotating electro-magnetic secondary storage), typically a million times slower with a thousand times more memory. Optical secondary storage devices are slower still

[3] The key distinction between the two is that NAS presents file systems to client computers whereas SAN provides disk access at the block level, leaving the attached system to provide file management to the client

[4] Gordon Moore, "Cramming more components onto integrated circuits", Electronics Magazine, 19 April 1965

[5] Gordon E. Moore, presentation at International Solid State Circuits Conference (ISSCC), February 10, 2003

[6] Chip Walter, "Kryder’s Law", Scientific American, August 2005

[7] Derived from ongoing research by John McCallum (retired), former Associate Professor in Computer Science, National University of Singapore

[8] Any technique which gives the impression of contiguous memory is referred to as "virtual memory". This may include using secondary memory to extend primary memory. Such techniques can be differentiated as paging which, in a contemporary sense, means the transfer of pages between primary memory and secondary storage, and swapping, which refers only to transfers to a dedicated swap space, partition or file

[9] László Böszörményi, Jürg Gutknecht, and Gustav Pomberger (Editors), The School of Niklaus Wirth : The Art of Simplicity, Morgan Kaufmann Publishers, 2000

[10] Most of the system resources material has been derived from Microsoft Knowledge Base articles : http://kb.microsoft.com

[11] Meet the World’s First 45nm Processor, Intel Corporation, 2007 http://www.intel.com/technology/silicon/45nm_technology.htm?iid=homepage+42nm

[12] The one area in which development is notably weak is seek times. Seek time is a metric for the time required by a disk head to physically move to the correct part of a disk to read data. Naturally enough, with a larger disk this metric can be greater. Improvements in seek times are significantly slower than improvements in disk size, which creates a bottleneck. New file systems are being developed to address this challenge (yet of varying capacities). See Chris Samuel, "Emerging Linux file systems", Linux World, 6 and 9 September, 2007. Available at : http://www.linuxworld.com/news/2007/090407-emerging-linux-filesystems.html

[13] Eric Raymond, The Art of Unix Programming, Addison-Wesley, 2003 and Tom Videan Steele, Eric S. Raymond, The New Hacker’s Dictionary (3rd edition), MIT Press, 1996

Please note that there was an uncorrected error in the original article in Organdi in Table 7 which left out the year 2016. This copy fixes that error. Organdi also presented all table information as images. This has also been corrected here.